WTF is AGI? AI terms that every marketer should know

What’s the difference between machine learning and deep learning? You’re about to find out. This glossary can serve as a linguistic primer as you begin to navigate the world of AI.

Images created by Midjourney using the prompt: "An intelligent humanoid machine holding a dictionary, sci-fi digital art."

There’s been a lot of chatter about artificial intelligence (AI) lately and how it could either deliver a work-free paradise or escape our control and quickly escalate into a nightmare (the likes of which have been captured in countless Hollywood blockbusters like 2001: A Space Odyssey and the Terminator franchise). Regardless of where you happen to fall on the utopia-dystopia spectrum, one thing is by now abundantly clear: AI is here to stay – and it seems almost certain to transform civilization to a degree that many can scarcely imagine.

That being the case, it’s important for all of us – including marketers, whose industry is already feeling the effects of the AI revolution – to have at least a basic understanding of what AI is and how it works. That starts with understanding some of the language that’s spoken in this strange technological territory.

Here are some critical AI terms that you need to know [we will be updating this glossary on a regular basis, so we recommend checking in on it routinely]:


A/B testing: A form of randomized experimentation in which two variants, A and B, of something such as an email, web page, ad or model are shown to randomly assigned groups of users to determine which performs better on a chosen metric, such as click-through or conversion rate.
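A common way to decide whether variant B's higher conversion rate is real or just noise is a two-proportion z-test. The sketch below is one minimal version of that test. The campaign figures are hypothetical, and the z-test is a standard statistical choice, not a method prescribed by this glossary.

```python
import math

def ab_test(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test comparing the conversion rates of variants A and B.
    Returns (rate_a, rate_b, z, two_sided_p_value)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate, assuming (null hypothesis) that A and B perform equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value

# Hypothetical email campaign: 10,000 recipients per variant.
rate_a, rate_b, z, p = ab_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
print(f"A: {rate_a:.2%}  B: {rate_b:.2%}  z = {z:.2f}  p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the difference is unlikely to be due to chance alone.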

Algorithm: A set of instructions or rules used – often by a computer – to solve a problem, execute calculations or process data.
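Euclid's algorithm for finding the greatest common divisor of two numbers is a classic illustration, chosen here only as an example. It is a single rule applied repeatedly until a stopping condition is met.

```python
def gcd(a: int, b: int) -> int:
    """Euclid's algorithm: greatest common divisor of two non-negative integers."""
    # Rule: replace (a, b) with (b, a mod b) until b reaches zero; a is then the answer.
    while b != 0:
        a, b = b, a % b
    return a

print(gcd(48, 18))
```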

Artificial general intelligence (AGI, also sometimes referred to as Strong AI): An AI program with an intellectual ability that’s comparable to that of an average adult human. AGI, in other words, would hypothetically (we have yet to build one) be able to solve problems across a vast range of categories, just as a human brain can.

Artificial narrow intelligence (ANI, also sometimes referred to as Weak AI): An AI program built to perform a single, narrow function, such as playing chess or responding to customer service questions. All of the AI programs that have been developed to date fall into the category of ANI.

Artificial neural network (ANN): A synthetic system, roughly modeled on the architecture of organic brains, composed of layers of interconnected artificial neurons.
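To make "layers of artificial neurons" concrete, here is a minimal sketch of a tiny two-layer network. Each neuron computes a weighted sum of its inputs plus a bias and passes the result through a sigmoid activation. The weights are hand-picked so that the network computes XOR (output 1 when exactly one input is 1). This is purely illustrative: a real network would learn its weights from data.

```python
import math

def sigmoid(x: float) -> float:
    return 1 / (1 + math.exp(-x))

def layer(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of all inputs plus a
    bias, then applies a sigmoid activation."""
    return [sigmoid(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
            for neuron_w, b in zip(weights, biases)]

# Hand-picked weights (illustrative only; a trained network would learn these).
HIDDEN_W = [[20, 20], [20, 20]]  # hidden neuron 1 behaves like OR, neuron 2 like AND
HIDDEN_B = [-10, -30]
OUTPUT_W = [[20, -20]]           # "OR but not AND", i.e. XOR
OUTPUT_B = [-10]

def predict(x1, x2):
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)
    (out,) = layer(hidden, OUTPUT_W, OUTPUT_B)
    return round(out)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", predict(a, b))
```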

Artificial superintelligence (ASI): Popularized by Oxford philosopher Nick Bostrom, "superintelligence" refers to a theoretical intellect – artificial or organic – that is more advanced than that of humans. An ASI could have only a slightly higher IQ score than the average human being, or it could be vastly, unfathomably more intelligent, comparable to the difference in cognitive ability between an ant and Nobel laureate Roger Penrose.

Association rule learning: A method of unsupervised and rule-based machine learning aimed at identifying commonalities or associations between variables in a dataset.
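Association rules are typically scored by support and confidence. Support is how often items appear together. Confidence is how often the "then" item appears when the "if" item does. The minimal sketch below scores every one-item rule on a handful of hypothetical shopping baskets. The thresholds are assumptions, and production tools use more scalable algorithms such as Apriori rather than checking every pair.

```python
from itertools import permutations

# Hypothetical shopping baskets.
transactions = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in the itemset."""
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(antecedent, consequent, transactions):
    """Estimated P(consequent | antecedent) = support(both) / support(antecedent)."""
    return support(antecedent | consequent, transactions) / support(antecedent, transactions)

MIN_SUPPORT = 0.4     # assumed threshold
MIN_CONFIDENCE = 0.7  # assumed threshold

items = sorted(set().union(*transactions))
rules = []
# Rules are directional ("if X then Y"), so check ordered pairs.
for x, y in permutations(items, 2):
    if support({x, y}, transactions) >= MIN_SUPPORT:
        conf = confidence({x}, {y}, transactions)
        if conf >= MIN_CONFIDENCE:
            rules.append((x, y, conf))

for x, y, conf in rules:
    print(f"{x} -> {y} (confidence {conf:.2f})")
```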

Automatic speech recognition (ASR – also known as computer speech recognition, speech-to-text or simply speech recognition): A machine’s capability to recognize human speech and then convert it into text. The iPhone dictation feature, for example, uses ASR.


Bayes’ theorem: Named after the 18th-century statistician Thomas Bayes, this theorem is a mathematical formula for calculating "conditional probability" – that is, the likelihood of a particular outcome given that some other event or piece of evidence has been observed. In practice, it is used to update a prior estimate of a probability in light of new evidence.
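In symbols, the theorem reads P(H | E) = P(E | H) × P(H) / P(E), where H is a hypothesis and E is the evidence. The sketch below applies it to a hypothetical marketing question: given that a visitor viewed the pricing page, how likely are they to buy? All three input figures are invented for illustration.

```python
def bayes_posterior(prior: float, likelihood: float, likelihood_if_not: float) -> float:
    """P(H | E) = P(E | H) * P(H) / P(E),
    where P(E) = P(E | H) * P(H) + P(E | not H) * (1 - P(H))."""
    evidence = likelihood * prior + likelihood_if_not * (1 - prior)
    return likelihood * prior / evidence

# Hypothetical figures: 2% of visitors buy (the prior), 60% of buyers view the
# pricing page, and 10% of non-buyers view it too.
posterior_prob = bayes_posterior(prior=0.02, likelihood=0.60, likelihood_if_not=0.10)
print(f"P(purchase | viewed pricing page) = {posterior_prob:.1%}")
```

Even though buyers are six times as likely to view the pricing page, the low 2% starting point keeps the updated probability at roughly 11%.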

Central processing unit (CPU): The core component of a digital computer, responsible for executing program instructions. The CPU – sometimes referred to as the "brain" or the "control center" of a computer – carries out arithmetic (adding, subtracting, multiplying and dividing) and logic operations, and it directs the flow of data between memory and the computer’s other components, running the instructions of the operating system and every other program. The CPU of modern computers is built upon a microprocessor.

Chatbot: An AI-based computer program that leverages natural language processing (NLP) to respond to questions, such as customer service queries, with automated spoken or text-based responses that simulate human conversation.

ChatGPT: An AI-powered chatbot launched by San Francisco-based startup OpenAI in November of 2022. ChatGPT uses NLP to simulate human conversation. According to OpenAI’s website, ChatGPT can “answer follow-up questions, admit its mistakes, challenge incorrect premises and reject inappropriate requests.”

Convolutional neural network (CNN): A class of artificial neural networks, commonly used in computer vision, that slides small learned filters across an image, enabling an AI model to detect, differentiate and analyze various components within it, such as edges, shapes and objects.
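The core operation of a CNN layer is sliding a small filter (kernel) over the image and summing element-wise products at each position. The minimal sketch below applies a hand-made vertical-edge filter to a tiny hypothetical grayscale image. In a real CNN, many such filters are learned from data rather than written by hand, and libraries add padding, strides and multiple channels.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum element-wise
    products. Like most CNN libraries, this is technically cross-correlation:
    the kernel is not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]

# Hypothetical 4x5 grayscale image: dark (0) on the left, bright (9) on the right.
image = [
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
]
# Hand-made vertical-edge detector; a trained CNN would learn weights like these.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The large values in the resulting feature map line up with the column where dark turns to bright, which is the "edge" the filter responds to.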

Deep Blue: A chess-playing computer developed by IBM, built for the sole purpose of playing chess. In 1997, it made history by becoming the first computer to defeat a reigning world chess champion, Garry Kasparov, in a match under standard tournament conditions.

Deep learning: A subset of machine learning that uses artificial neural networks with multiple layers (commonly defined as at least three) to learn from data, typically in vast quantities. Additional layers can allow a network to learn more complex patterns, although adding layers does not automatically improve performance. Deep learning is distinct from deep reinforcement learning, which combines deep learning with reinforcement learning.

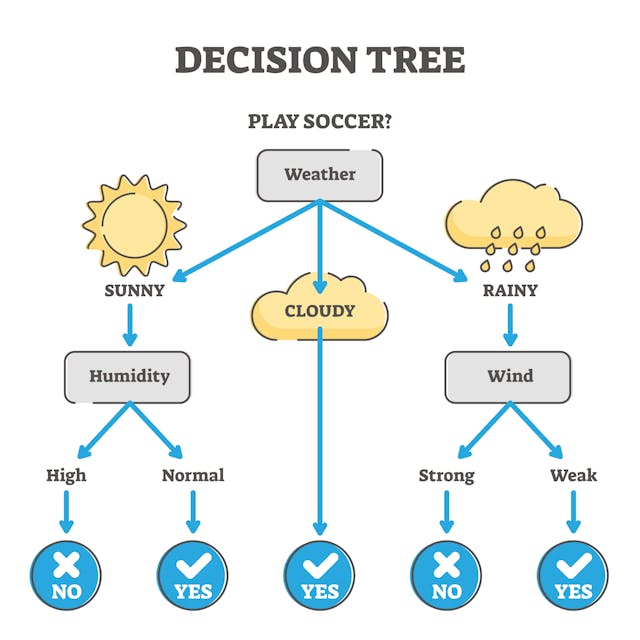

Decision tree: A flowchart-like model of the process of arriving at a decision, wherein each "branch" represents a particular choice or course of action. Decision trees start at a "root node" (which consists of all the relevant data that’s being analyzed), branch off into "internal nodes" (also known as "decision nodes") and then terminate in "leaf nodes" (also known as "terminal nodes," which represent all the possible outcomes of a given decision-making process).

Here’s a simple example of a decision tree rooted in the question of whether or not you should go outside to play soccer:
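In code, a decision tree can be sketched as a set of nested questions. In the minimal Python example below, the questions (outlook, humidity, wind) and the outcomes are hypothetical assumptions made for the soccer scenario, not a reproduction of any particular tree.

```python
# Hypothetical tree. Internal (decision) nodes are dicts holding a question and one
# branch per possible answer; leaf (terminal) nodes are strings with the final decision.
TREE = {
    "question": "outlook",
    "branches": {
        "sunny": {
            "question": "humidity",
            "branches": {"high": "Stay inside", "normal": "Play soccer"},
        },
        "overcast": "Play soccer",
        "rainy": {
            "question": "windy",
            "branches": {True: "Stay inside", False: "Play soccer"},
        },
    },
}

def decide(node, conditions):
    """Start at the root node and follow one branch per answer until a leaf is reached."""
    while isinstance(node, dict):
        answer = conditions[node["question"]]
        node = node["branches"][answer]
    return node

print(decide(TREE, {"outlook": "sunny", "humidity": "normal", "windy": False}))
print(decide(TREE, {"outlook": "rainy", "humidity": "high", "windy": True}))
```

In machine learning, an algorithm would choose these questions automatically from historical data instead of having them written by hand.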

Machine learning: A subdiscipline of artificial intelligence that, using statistical formulas and data, enables computers to progressively improve their ability to carry out a particular task or set of tasks. Crucially, a computer leveraging machine learning does not need to be explicitly programmed to improve its performance in a particular manner – rather, it’s given access to data and is designed to “teach” itself. The results are often surprising to their human creators.
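One of the simplest illustrations of "learning from data" is fitting a straight line by gradient descent. In the minimal sketch below, the training data is hypothetical and was generated from the rule y = 2x + 1. The program is never given that rule. It repeatedly adjusts two parameters to shrink its prediction error until it recovers the rule from the examples. Linear regression is used here only as an example of the general idea.

```python
# Hypothetical training data generated from y = 2x + 1 (the program is not told this).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ≈ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Nudge each parameter in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = train(xs, ys)
print(f"learned w = {w:.3f}, b = {b:.3f}")
print("prediction for x = 10:", round(w * 10 + b, 2))
```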

Microprocessor: A CPU for digital computing systems contained within a single integrated circuit (also known as a microchip, hence the prefix in the word "microprocessor") or a small grouping of integrated circuits. Intel introduced the world's first commercially available microprocessor, dubbed the 4004, in 1971.

Natural language processing (NLP): A branch of artificial intelligence, drawing on elements of linguistics and computer science, aimed at enabling computers to understand spoken and written language in a manner that imitates the human brain’s language-processing capability.

Pattern recognition: An automated process whereby a computer is able to identify patterns within a set of data.

Prior probability (also sometimes referred to simply as a prior): A term used in the field of Bayesian statistics to refer to the likelihood assigned to an event before (prior to) new evidence is taken into account. Once that evidence is incorporated, typically via Bayes’ theorem, the revised likelihood is known as the posterior probability.
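A common way to encode a prior for a rate, such as a click-through rate, is a Beta distribution. With this prior, updating on new click data reduces to simple addition. The minimal sketch below uses hypothetical numbers: a prior centered on 20%, followed by a new campaign that gets 30 clicks from 100 impressions. The Beta prior is one standard modeling choice among several.

```python
def update_beta_prior(alpha: float, beta: float, successes: int, trials: int):
    """Conjugate Bayesian update: a Beta(alpha, beta) prior on a rate, combined with
    binomial data, yields a Beta(alpha + successes, beta + failures) posterior."""
    return alpha + successes, beta + (trials - successes)

def beta_mean(alpha: float, beta: float) -> float:
    return alpha / (alpha + beta)

# Hypothetical prior belief: past campaigns suggest a click-through rate near 20%,
# encoded as Beta(2, 8), whose mean is 2 / (2 + 8) = 0.2.
prior = (2, 8)
# New (hypothetical) evidence: 30 clicks out of 100 impressions.
posterior = update_beta_prior(*prior, successes=30, trials=100)

print("prior mean:", beta_mean(*prior))
print("posterior:", posterior, "mean:", round(beta_mean(*posterior), 3))
```

The posterior mean lands between the prior's 20% and the observed 30%, pulled toward the data because the new evidence outweighs the relatively weak prior.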

Reinforcement learning (RL): The process of teaching machine learning models to make optimal decisions within a dynamic environment. When using RL, a programmer will often present a machine learning model (often called an "agent") with a game-like situation in which some outcomes are preferable to others. The model then experiments with different strategies, and a reward signal designed by the programmer "reinforces" the desired behavior with rewards and discourages other behaviors through penalties.
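A multi-armed bandit is one of the simplest settings in reinforcement learning. In each round, an agent picks one of several options and receives a reward, and it has to balance trying new options (exploration) against reusing the best one found so far (exploitation). The minimal sketch below uses an epsilon-greedy agent. The three "arms" can be thought of as hypothetical ad variants with made-up success rates that the agent does not know in advance.

```python
import random

def run_bandit(true_rates, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent for a multi-armed bandit. Each arm pays a reward of 1 with
    an unknown probability. With probability epsilon the agent explores a random arm;
    otherwise it exploits the arm with the best estimated value."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    counts = [0] * len(true_rates)
    values = [0.0] * len(true_rates)  # running average reward per arm
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(true_rates))  # explore
        else:
            # Exploit: highest estimated value (ties go to the lowest index).
            arm = max(range(len(values)), key=values.__getitem__)
        reward = 1 if rng.random() < true_rates[arm] else 0  # environment's feedback
        counts[arm] += 1
        # Incremental mean: move the estimate toward the observed reward.
        values[arm] += (reward - values[arm]) / counts[arm]
    return values, counts

# Hypothetical success rates for three ad variants.
values, counts = run_bandit([0.2, 0.5, 0.8])
print("estimated values:", [round(v, 2) for v in values])
print("times each arm was chosen:", counts)
```

Over many rounds, the rewards steer the agent toward the best-paying arm, and it still spends a small share of its choices exploring the others.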


Semi-supervised learning: A branch of machine learning which, as the name suggests, blends elements of both supervised learning and unsupervised learning. Semi-supervised learning is based on the input of a small amount of labeled data and a larger quantity of unlabeled data, the goal being to teach an algorithm to sort the latter into predetermined categories based on the former, and also to allow the algorithm to identify new patterns across the dataset. It is widely considered to be a kind of bridge, connecting the benefits of supervised learning with those of unsupervised learning.
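Self-training is one simple semi-supervised technique, and only one of several. The algorithm labels the unlabeled example it is most confident about, adds it to the labeled set, and repeats. In the minimal sketch below, "confidence" means being close to an already-labeled point (a 1-nearest-neighbour rule). The customer data and the two category names are hypothetical.

```python
import math

# Hypothetical customer data: (visits per week, average basket size in tens of dollars).
# Only two points come with labels; the other six are unlabeled.
labeled = [((1.0, 1.0), "casual"), ((8.0, 8.0), "loyal")]
unlabeled = [(1.5, 2.0), (2.0, 1.0), (7.0, 8.5), (8.5, 7.0), (3.0, 2.5), (6.0, 7.0)]

def self_train(labeled, unlabeled):
    """Self-training with a 1-nearest-neighbour rule: on each round, pseudo-label the
    unlabeled point that lies closest to any labeled point (the most confident guess),
    then treat it as labeled data for the following rounds."""
    labeled = list(labeled)
    remaining = list(unlabeled)
    while remaining:
        best = None  # (distance, unlabeled point, label of its nearest labeled neighbour)
        for p in remaining:
            for q, label in labeled:
                d = math.dist(p, q)
                if best is None or d < best[0]:
                    best = (d, p, label)
        _, point, label = best
        labeled.append((point, label))
        remaining.remove(point)
    return labeled

result = self_train(labeled, unlabeled)
for point, label in result:
    print(point, label)
```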

Supervised learning: A branch of machine learning based on the input of clearly labeled data and aimed at training algorithms to recognize patterns and accurately label new data.
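k-nearest neighbours is one of the most basic supervised learning methods. To classify a new example, it finds the k most similar labeled examples and takes a majority vote. The minimal sketch below uses hypothetical labeled customer data, and the choice of k = 3 is an assumption.

```python
import math
from collections import Counter

# Hypothetical labeled training data: (hours on site per week, emails opened) -> label.
training_data = [
    ((0.5, 1), "unlikely to buy"),
    ((1.0, 0), "unlikely to buy"),
    ((1.5, 2), "unlikely to buy"),
    ((6.0, 8), "likely to buy"),
    ((7.5, 6), "likely to buy"),
    ((8.0, 9), "likely to buy"),
]

def knn_predict(point, data, k=3):
    """k-nearest neighbours: label a new point by majority vote among the k closest
    labeled examples (Euclidean distance)."""
    nearest = sorted(data, key=lambda item: math.dist(point, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(knn_predict((7.0, 7), training_data))
print(knn_predict((1.0, 1), training_data))
```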

Turing test: A blinded experiment, proposed in 1950 by and named after mathematician Alan Turing, in which a human evaluator holds text-based conversations with both a machine and another human without knowing which is which. If the evaluator cannot reliably tell the machine from the human, the machine is said to have passed the Turing test.

Uncanny valley: A theoretical concept, first postulated by roboticist Masahiro Mori in 1970, which refers to the sense of eeriness or unease that a human being may feel when interacting with an artificial entity that closely (though imperfectly) resembles a human.

Unsupervised learning: A branch of machine learning which is based upon the input of unlabeled data. In contrast to supervised learning, unsupervised learning allows an algorithm to create its own rules for identifying patterns and categorizing data.
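k-means clustering is a classic unsupervised algorithm. Given no labels at all, it groups points by repeatedly assigning each one to its nearest cluster center and then moving each center to the average of its points. The minimal sketch below runs it on hypothetical customer data. For reproducibility, it uses the first k points as starting centers, which is a simplifying assumption; real implementations usually use smarter or randomized initialization.

```python
import math

def kmeans(points, k, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then move each
    centroid to the mean of its assigned points. No labels are used at any step."""
    centroids = [tuple(p) for p in points[:k]]  # simplistic initialization
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical customers: (monthly visits, monthly spend in hundreds of dollars).
points = [(1, 1), (9, 9), (1.5, 2), (8, 8.5), (2, 1), (9.5, 8)]
centroids, clusters = kmeans(points, k=2)
for center, members in zip(centroids, clusters):
    print([round(c, 2) for c in center], members)
```

The algorithm finds the two customer segments on its own. Naming them (say, "occasional" versus "high-value") is still left to a human.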

For more on the latest happenings in tech, sign up for The Drum’s Inside the Metaverse weekly newsletter here.